One of the global challenges of the 21st century is to counteract climate change. Germany's contribution, in the form of the climate targets set for 2030 and 2045, is at risk under the current framework conditions [1]. As a countermeasure, the new Energy Efficiency Act (EnEfG) formulates far-reaching requirements and legal stipulations to increase energy efficiency and reduce climate-damaging emissions.

For the first time, the energy-intensive and fast-growing data centre sector is explicitly addressed in order to reduce its specific energy demand and to promote the use of waste heat from data centres. Rightly so: at 16 TWh per year, German data centres require significantly more electrical energy than, for example, the city of Berlin [2].

Figure 1 shows operators and owners that optimising the energy efficiency of their data centres pays off in many respects.

Against this background, we present three basic measures that can be used to increase the efficiency of data centres.

Figure 1: Driving factors for energy-efficient data centres

Measure 1: Select efficient components

In addition to the pure investment costs, the energy demand during use and thus the operating costs represent a decisive criterion in the selection of components. Components that have a high share of the total energy consumption should be prioritised.

For data centres, this especially concerns:

- the uninterruptible power supply (UPS),

- the power supply units of the servers and

- the compression chiller of the cooling system.

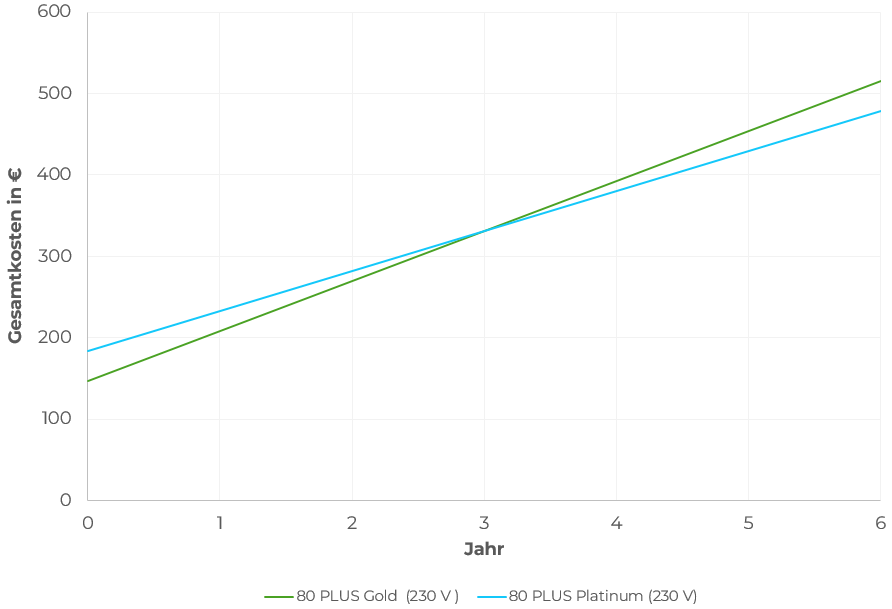

The comparison of the operating and investment costs of two power supply units (see Figure 2) shows that a higher investment in more energy-efficient components makes sense above all if it is recouped within a few years through the operating cost savings.

Figure 2: Comparison of investment and operating costs for various 1000 W power supplies at 20% load, prices as of 08/23
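The payback reasoning behind this comparison can be sketched in a few lines. The following is a minimal illustration, not the calculation behind Figure 2: the efficiencies, the electricity price and the price difference between the two units are hypothetical values chosen only to show the method.

```python
def annual_energy_cost(output_w, efficiency, price_eur_per_kwh, hours_per_year=8760):
    """Yearly electricity cost of a power supply that delivers output_w watts."""
    input_w = output_w / efficiency  # power drawn from the grid, including losses
    return input_w / 1000 * hours_per_year * price_eur_per_kwh


def payback_years(extra_investment_eur, annual_saving_eur):
    """Simple (undiscounted) payback period in years."""
    if annual_saving_eur <= 0:
        return float("inf")  # the more expensive unit never pays off
    return extra_investment_eur / annual_saving_eur


# Hypothetical inputs: a 1000 W power supply at 20 % load delivers 200 W.
load_w = 0.20 * 1000
price = 0.30  # EUR/kWh, assumed
cost_standard = annual_energy_cost(load_w, efficiency=0.85, price_eur_per_kwh=price)
cost_efficient = annual_energy_cost(load_w, efficiency=0.92, price_eur_per_kwh=price)

saving = cost_standard - cost_efficient
years = payback_years(extra_investment_eur=100.0, annual_saving_eur=saving)
print(f"Annual saving: {saving:.2f} EUR, payback: {years:.1f} years")
```

With real data, the efficiencies should be taken from the manufacturer's data sheet at the expected load, since power supply efficiency varies with load.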

Measure 2: Optimise system for the operating point

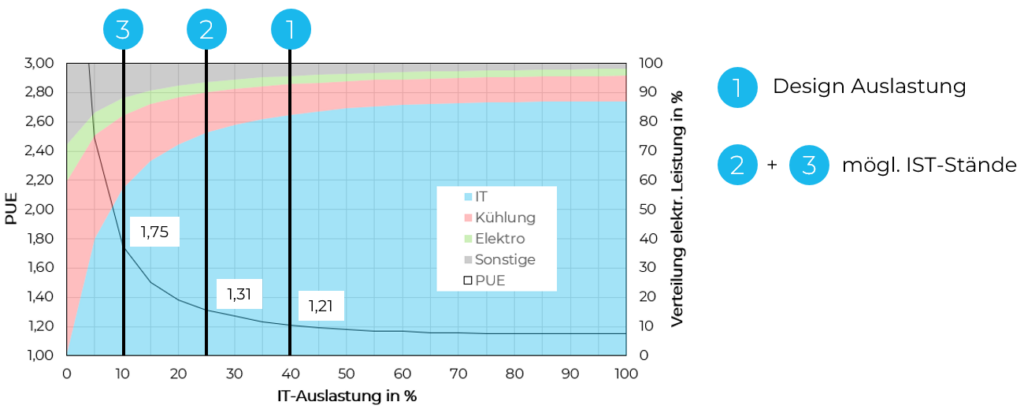

Although 100 percent utilisation of all IT resources in a data centre is theoretically possible, in practice the average utilisation is significantly lower, depending on the application scenario. For a cloud data centre, for example, the typical utilisation lies between 20 and 30 percent [3]. It is therefore particularly important to design the system for the actual operating point and not for the full-load case. Otherwise, significant efficiency losses must be expected at the operating point (see Figure 3). High efficiency in the partial-load case can be achieved by:

- advantageous control of components, such as speed control of fans and circulating pumps, or

- modular plants.

Modular plants can be switched on as needed. This prevents the high efficiency losses that occur when large units have to be throttled back heavily. Modularity can also improve partial-load efficiency through the sensible interconnection of components. One example is the series connection of fans, which allows the fan network to deliver a required air volume flow at lower speeds than individual fans. Since the power consumption of fans has a cubic dependence on their speed (P ~ n³), the total energy requirement for air transport can be reduced.
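The effect of the cubic relationship P ~ n³ can be made tangible with a short calculation. This is an idealised sketch: it applies only the proportionality stated above and ignores motor and drive losses, which are not covered here.

```python
def relative_fan_power(speed_ratio):
    """Fan power relative to full speed, using the idealised law P ~ n^3."""
    return speed_ratio ** 3


for ratio in (1.0, 0.8, 0.5):
    print(f"{ratio:.0%} speed -> {relative_fan_power(ratio):.1%} power")
```

Under this idealisation, reducing the speed to 80 percent roughly halves the power consumption, which is why even small speed reductions are worthwhile.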

Figure 3: Dependency of PUE on IT load in an example data centre [4]
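Why PUE deteriorates at low IT load can be illustrated with a simple model. PUE is the ratio of total facility power to IT power. The sketch below assumes that the infrastructure overhead consists of a fixed part and a part proportional to the IT load. This split and all parameter values are hypothetical and are not taken from the calculator behind Figure 3.

```python
def pue(it_load_fraction, it_rated_kw=1000.0,
        fixed_overhead_kw=150.0, proportional_overhead=0.15):
    """PUE for a given IT load fraction under an assumed overhead model.

    fixed_overhead_kw:      infrastructure power drawn regardless of load (assumed)
    proportional_overhead:  infrastructure power per kW of IT power (assumed)
    """
    if it_load_fraction <= 0:
        raise ValueError("IT load fraction must be positive")
    it_kw = it_load_fraction * it_rated_kw
    overhead_kw = fixed_overhead_kw + proportional_overhead * it_kw
    return (it_kw + overhead_kw) / it_kw


for load in (1.0, 0.5, 0.25):
    print(f"IT load {load:.0%}: PUE = {pue(load):.2f}")
```

In this model the fixed overhead is spread over less IT power as the load drops, so the PUE rises. Speed-controlled and modular components reduce exactly this fixed share.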

Measure 3: Reduce energy demand for cooling

In German data centres, the cooling infrastructure is, directly after the IT infrastructure, the area with the highest energy demand [5]. The main cause is the widespread use of compression refrigeration machines. These are used to ensure cooling even at high outside temperatures. If the environment is too warm, the required supply air temperature of the servers cannot be achieved by simply cooling against the outside air.

Instead of moving the data centre to a climatically more favourable region, the following energy-saving measures are also possible:

a) Alternative refrigeration

In addition to refrigeration from compression refrigeration machines, evaporative cooling or adsorption or absorption refrigeration machines can also be used. The former requires water, the latter two require heat at a high temperature level. If these resources are available, it can be evaluated whether compression refrigeration can be replaced.

b) Raise the temperature of the cooling medium

In order for the server waste heat to be released to the outside air, the inlet temperature of the cooling medium must, depending on the cooling concept, sometimes be significantly above the outside air temperature. Consequently, increasing the inlet temperature of the cooling medium is one way to increase the free cooling proportion.

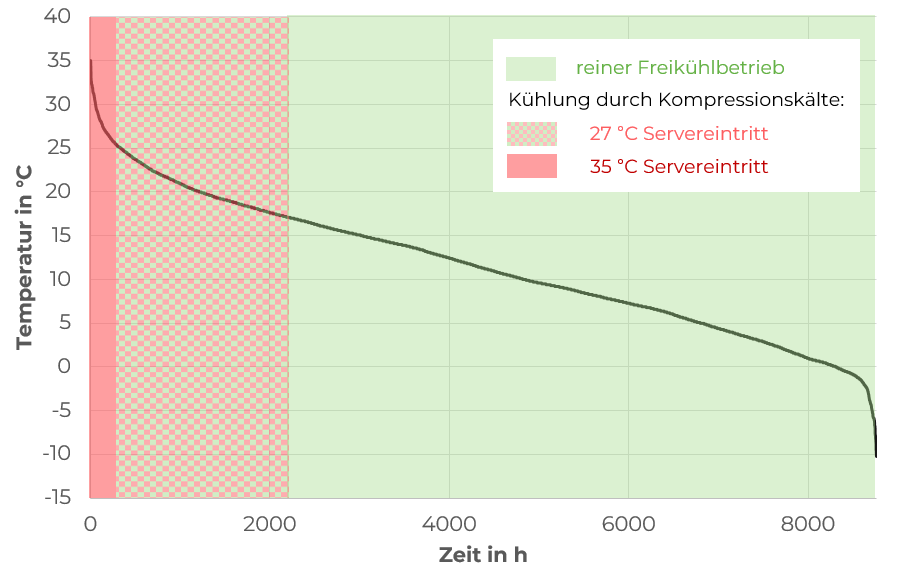

For air-cooled servers, this means also allowing inlet temperatures above 30 °C for a few hours per year. The extent to which the specifications of the respective server permit this can be seen from the corresponding ASHRAE class (according to the TC 9.9 Reference Card [6]). Depending on the classification, servers can be operated at up to 32 °C (A1), 35 °C (A2), 40 °C (A3) or even 45 °C (A4). Figure 4 illustrates how far the share of compression cooling can be reduced, and the free cooling share increased, by raising the maximum server inlet temperature from 27 °C to 35 °C (A2). For an exemplary data centre located in Dresden, this increase reduces the running time of the compression chiller from 2100 to 300 hours per year. The reduction in operating hours directly lowers the electricity-price-dependent operating costs and is therefore a relevant factor.

As a counteracting effect, raising the server inlet temperature moderately increases the electrical energy demand of the servers.

Although this measure usually yields an overall energy improvement, it must always be checked that the additional server demand does not exceed the savings in the cooling system.

Figure 4: Increase in the free cooling proportion through higher server inlet temperatures at the Dresden site (without evaporative cooling)
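The relationship between the permitted inlet temperature and chiller running time can be estimated by counting the hours in which the outside air is too warm for free cooling. The sketch below is an assumption-laden illustration: the temperature profile is synthetic (not Dresden weather data), and the fixed temperature difference `approach_k` between outside air and server inlet is a hypothetical simplification. It will therefore not reproduce the 2100 and 300 hours shown in Figure 4.

```python
import math

# ASHRAE class limits quoted in the text (maximum server inlet temperature in °C).
ASHRAE_MAX_INLET_C = {"A1": 32, "A2": 35, "A3": 40, "A4": 45}


def synthetic_outside_temps(hours=8760, mean_c=10.0,
                            seasonal_amp_c=10.0, daily_amp_c=8.0):
    """Hypothetical hourly outside temperatures: seasonal plus daily sine wave."""
    temps = []
    for h in range(hours):
        seasonal = -seasonal_amp_c * math.cos(2 * math.pi * h / hours)  # coldest at start of year
        daily = -daily_amp_c * math.cos(2 * math.pi * (h % 24) / 24)    # coldest at midnight
        temps.append(mean_c + seasonal + daily)
    return temps


def chiller_hours(outside_temps_c, max_inlet_c, approach_k=10.0):
    """Number of hours in which free cooling alone cannot reach the inlet limit."""
    return sum(1 for t in outside_temps_c if t + approach_k > max_inlet_c)


temps = synthetic_outside_temps()
for limit in (27, ASHRAE_MAX_INLET_C["A2"]):
    print(f"Max inlet {limit} °C: {chiller_hours(temps, limit)} chiller hours/year")
```

For a real site, the synthetic profile would be replaced by measured hourly temperatures, and the approach temperature by the value resulting from the actual cooling concept.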

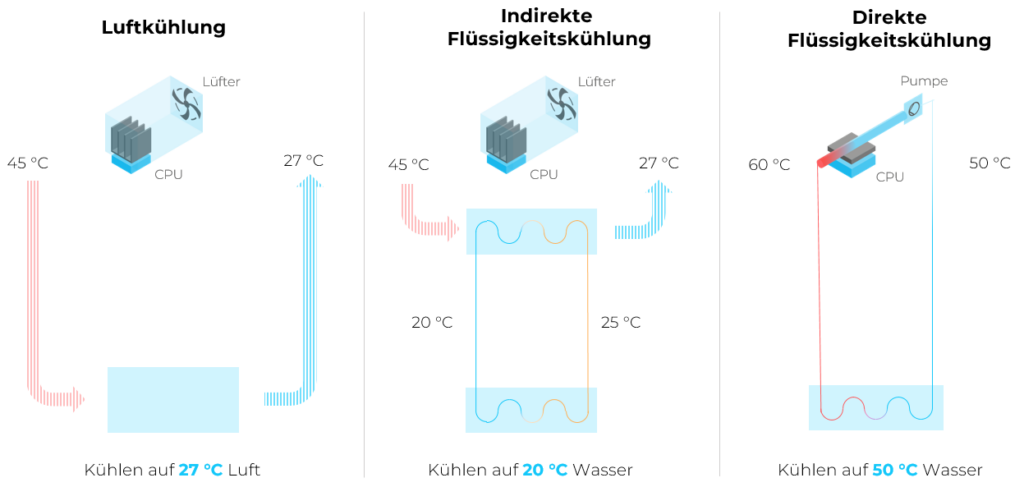

Another way to raise the temperature is to use servers with liquid cooling. Because liquids have a higher density and heat capacity than air, servers can be cooled more efficiently. Compared to air cooling, this allows the temperature of the cooling medium (liquid) to approach the chip temperature while maintaining the cooling effect (see Figure 5).

At the same time, today's servers often allow liquid inlet temperatures of over 50 °C, which means that cooling without compression refrigeration can be realised without any problems at outside temperatures of up to 45 °C. The higher temperature level also increases the probability of finding a suitable heat sink for waste heat utilisation (see point c).

Figure 5: Free cooling potential of indirect and direct liquid and air cooling in comparison

c) Alternative heat sink

Using an alternative heat sink is another option for avoiding compression cooling. Unlike the outside air, this heat sink should be cooler than the cooling medium throughout the year. Subject to local environmental regulations, groundwater or river water, for example, can be considered. It is even more beneficial to feed the waste heat into a local or decentralised heating network that absorbs heat all year round. In this case, the waste heat can even be put to good use.

Conclusion

The measures presented here already enable a first significant increase in the energy efficiency of a data centre and reveal monetary savings potential. However, the interdisciplinary complexity of continuously optimising the energy efficiency of data centres should not be underestimated.

With its experts, Cloud&Heat supports interested parties through individual consulting, feasibility studies and training courses on the topic of energy efficiency in the data centre.

Sources

[1] https://www.handelsblatt.com/politik/deutschland/klimapolitik-deutschland-duerfte-seine-klimaziele-2030-und-2045-verfehlen/29273700.html

[2] https://www.deutschlandfunk.de/stromverbrauch-digitalisierung-internet-bitcoin-rechenzentren-abwaerme-100.html

[3] https://www.wired.com/2012/10/data-center-servers/

[4] https://www.se.com/ww/en/work/solutions/system/s1/data-center-and-network-systems/trade-off-tools/data-center-efficiency-and-pue-calculator/

[5] Hintemann, R. & Hinterholzer, S. (2023), "Data Centres 2022: Steigender Energie- und Ressourcenbedarf der Rechenzentrumsbranche", Berlin, Borderstep Institut.

[6] ASHRAE (2021), "Thermal Guidelines for Data Processing Environments, Fifth Ed."